Using Microservices and Containers

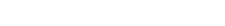

Microservices and containers have changed how CI/CD pipelines operate. Breaking a large application into smaller, independent services lets teams build, test, and release each part on its own schedule. Packaging those services in containers keeps their behavior consistent across environments.

Key Benefits of Microservices and Containers:

| Benefit | Description |
| --- | --- |
| Better Fault Handling | If one service fails, the rest of the application remains operational. |
| Easier Updates | You can update or modify one microservice without affecting others. |
| Faster Development | Teams can build, test, and deploy smaller, independent components more quickly. |

Steps to Implement Microservices and Containers:

- Break down your application into smaller, manageable services.

- Select a containerization tool like Docker.

- Use orchestration tools like Kubernetes to manage your containers at scale.
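The fault-handling benefit listed above can be illustrated with a minimal Python sketch. The service functions (`catalog_service`, `recommendations_service`) and the `call_with_fallback` helper are hypothetical stand-ins for real network calls, not part of any framework. The only point is that one failing service degrades a single part of the response instead of taking the whole application down.

```python
from typing import Callable


def call_with_fallback(service: Callable[[], dict], fallback: dict) -> dict:
    """Call a service and return the fallback value if the call raises."""
    try:
        return service()
    except Exception:
        # In a real system you would also log the error and emit a metric.
        return fallback


def catalog_service() -> dict:
    # Hypothetical healthy service.
    return {"items": ["book", "pen"]}


def recommendations_service() -> dict:
    # Hypothetical service that is currently down.
    raise ConnectionError("recommendations service is unavailable")


def build_page() -> dict:
    # Each section is fetched independently, so one failure stays contained.
    return {
        "catalog": call_with_fallback(catalog_service, {"items": []}),
        "recommendations": call_with_fallback(recommendations_service, {"items": []}),
    }


page = build_page()
print(page)
```

In practice each function would be a separate containerized service reached over the network, and the fallback logic would usually live in a client library or service mesh rather than in hand-written code.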

Working with Serverless Systems

Serverless platforms such as AWS Lambda let you focus on writing code while the provider manages the underlying infrastructure. This can lower costs and shorten deployment times.

Best Practices for Serverless Systems:

- Use frameworks like AWS SAM or the Serverless Framework to simplify building and deploying serverless applications.

- Design your applications to respond to specific events (event-driven architecture).

- Continuously monitor your serverless functions’ performance and optimize them for better efficiency.
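As a rough illustration of the event-driven practice above, here is a sketch of a Python function in the style of an AWS Lambda handler. The `handler(event, context)` signature follows Lambda's Python convention, but the helper names, the dispatch table, and the trimmed-down event records are assumptions made for this example. Real S3 and SQS events carry many more fields.

```python
import json


def on_object_created(record: dict) -> str:
    # Simplified S3 record: only the object key is read here.
    key = record["s3"]["object"]["key"]
    return f"processed upload {key}"


def on_queue_message(record: dict) -> str:
    # Simplified SQS record: only the message body is read here.
    return f"processed message {record['body']}"


# Hypothetical dispatch table mapping an event source to its handler.
HANDLERS = {
    "aws:s3": on_object_created,
    "aws:sqs": on_queue_message,
}


def handler(event: dict, context: object) -> dict:
    """Route each incoming record to the function registered for its source."""
    results = []
    for record in event.get("Records", []):
        source = record.get("eventSource")
        process = HANDLERS.get(source)
        if process is None:
            results.append(f"ignored {source}")
        else:
            results.append(process(record))
    return {"statusCode": 200, "body": json.dumps(results)}
```

Keeping each event type in its own small function makes the handlers easy to test locally by passing in sample event dictionaries, without deploying anything.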

Managing Multi-Cloud Pipelines

Multi-cloud environments introduce additional complexity into CI/CD pipelines, but they offer flexibility and resilience.

Tips for Managing Multi-Cloud Pipelines:

| Approach | Description |
| --- | --- |
| Use Cloud-Neutral Tools | Choose tools like Terraform or Jenkins that work across multiple cloud providers. |
| Unified Pipelines | Build a single CI/CD pipeline, for example in Bitbucket Pipelines or Jenkins, that can deploy to different cloud platforms. |
| Monitor Performance | Implement monitoring tools to track the performance of your pipeline across clouds. |
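One way to picture the unified-pipeline approach is a single deployment loop that talks to every cloud through the same small interface. The sketch below is only an illustration: `Deployer`, `FakeCloudDeployer`, and `run_pipeline` are hypothetical names, and the fake deployers return strings instead of calling any real provider API.

```python
from dataclasses import dataclass
from typing import Dict, List, Protocol


class Deployer(Protocol):
    """Common interface every cloud-specific deployer is assumed to implement."""

    name: str

    def deploy(self, artifact: str) -> str: ...


@dataclass
class FakeCloudDeployer:
    # Stand-in for a real provider integration (for example a Terraform run).
    name: str
    healthy: bool = True

    def deploy(self, artifact: str) -> str:
        if not self.healthy:
            raise RuntimeError(f"{self.name} rejected the deployment")
        return f"{artifact} deployed to {self.name}"


def run_pipeline(artifact: str, deployers: List[Deployer]) -> Dict[str, str]:
    """Deploy one artifact to every target and record the outcome per cloud."""
    results = {}
    for deployer in deployers:
        try:
            results[deployer.name] = deployer.deploy(artifact)
        except Exception as exc:
            # A failure in one cloud does not stop deployment to the others.
            results[deployer.name] = f"failed: {exc}"
    return results


targets = [
    FakeCloudDeployer("aws"),
    FakeCloudDeployer("azure", healthy=False),
    FakeCloudDeployer("gcp"),
]
results = run_pipeline("app:1.0", targets)
print(results)
```

The per-cloud result dictionary also gives you a natural place to attach the cross-cloud monitoring mentioned in the table.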

Applying AI and ML in Orchestration

AI and machine learning are transforming CI/CD pipelines, helping to predict and prevent issues, optimize performance, and improve testing processes.

How AI and ML Can Enhance Pipelines:

- Error Prediction: AI can predict potential failures based on historical data.

- Pipeline Optimization: Machine learning can automate performance tuning, making pipelines run faster.

- Intelligent Testing: AI can identify high-risk areas of the application to prioritize testing.
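To give a feel for intelligent testing, the sketch below ranks tests by how often they failed in past runs so the riskiest ones run first. This is a plain frequency heuristic standing in for a trained model, and the `prioritize_tests` function and the sample history are assumptions made for illustration.

```python
from collections import Counter
from typing import List, Tuple


def prioritize_tests(history: List[Tuple[str, bool]]) -> List[str]:
    """Order test names by historical failure rate, highest first.

    `history` holds (test_name, passed) pairs from earlier pipeline runs.
    Ties are broken alphabetically so the ordering is deterministic.
    """
    runs = Counter()
    failures = Counter()
    for name, passed in history:
        runs[name] += 1
        if not passed:
            failures[name] += 1
    return sorted(runs, key=lambda name: (-failures[name] / runs[name], name))


# Hypothetical results collected from previous pipeline runs.
history = [
    ("test_login", False),
    ("test_login", True),
    ("test_cart", True),
    ("test_cart", True),
    ("test_payment", False),
]
ordered = prioritize_tests(history)
print(ordered)
```

A production system would likely weigh in more signals, such as which files changed in the commit, but the input and output shape would stay similar.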

Getting Started with AI/ML in CI/CD:

- Collect pipeline performance data.

- Choose an AI platform like Google Cloud AI or Amazon SageMaker.

- Develop models to automate and enhance your pipeline processes.

Common Challenges in CI/CD Pipeline Orchestration

CI/CD pipelines, while powerful, come with their own set of challenges. Here are common issues and how to address them:

| Challenge | Solution |
| --- | --- |
| Insufficient Testing | Implement comprehensive testing: unit, integration, and end-to-end tests. |
| Lack of Monitoring | Use monitoring tools like Prometheus or Grafana to track pipeline health. |
| Outdated Dependencies | Automate dependency updates using tools like Dependabot or Renovate. |
| Resource Inefficiency | Reduce waste by using containers, serverless services, or cloud resources that scale with demand. |
| Manual Processes | Automate repetitive tasks, such as testing, building, and deploying. |

Handling Large-Scale Projects

Managing large-scale projects can be daunting, but with the right strategies, you can break down the complexity.

Strategies for Managing Large Projects:

- Break the project into smaller, modular parts.

- Design reusable pipelines to speed up development.

- Run parallel tasks to save time and improve efficiency.

- Use Git or another version control system to track code changes effectively.
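The parallel-tasks strategy above can be sketched with Python's standard `concurrent.futures` module. The task names and the `run_task` function are placeholders; the short `sleep` stands in for real work such as linting or running a test suite.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import List


def run_task(name: str) -> str:
    time.sleep(0.1)  # stand-in for real work
    return f"{name}: ok"


def run_parallel(tasks: List[str]) -> List[str]:
    """Run independent tasks concurrently and return results in input order."""
    with ThreadPoolExecutor(max_workers=4) as pool:
        return list(pool.map(run_task, tasks))


# Hypothetical tasks that do not depend on each other.
TASKS = ["lint", "unit-tests", "build-docs"]

start = time.perf_counter()
results = run_parallel(TASKS)
elapsed = time.perf_counter() - start
print(results, f"finished in {elapsed:.2f}s")
```

Because the three tasks overlap, the total time is close to the duration of the slowest task rather than the sum of all three. Most CI systems offer the same idea natively through parallel jobs or steps.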

Dealing with Complex Pipelines

Complex pipelines require careful management to maintain efficiency and avoid bottlenecks.

Simplifying Complex Pipelines:

- Use pipeline visualization tools like Jenkins Blue Ocean to get a clear view of your pipeline flow.

- Break down large pipelines into smaller, manageable segments.

- Automate repetitive tasks and monitor pipeline performance regularly to identify areas for improvement.
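One way to break a large pipeline into manageable segments is to describe its stages as a dependency graph and let the graph decide what can run together. The sketch below uses `graphlib.TopologicalSorter` from the Python standard library (Python 3.9 or later). The stage names and the `parallel_batches` helper are assumptions chosen for illustration.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Hypothetical pipeline: each stage maps to the stages it depends on.
STAGES: Dict[str, Set[str]] = {
    "build": set(),
    "unit-tests": {"build"},
    "integration-tests": {"build"},
    "deploy": {"unit-tests", "integration-tests"},
}


def parallel_batches(stages: Dict[str, Set[str]]) -> List[List[str]]:
    """Group stages into batches whose members can run at the same time."""
    sorter = TopologicalSorter(stages)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # stages with all dependencies met
        batches.append(ready)
        sorter.done(*ready)  # mark the batch finished to unlock the next one
    return batches


batches = parallel_batches(STAGES)
print(batches)
```

Each batch is a small segment you can reason about on its own, and a cycle in the dependencies is reported up front instead of surfacing as a stuck pipeline.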

Evaluating CI/CD Pipeline Success

Measuring the success of your CI/CD pipeline requires tracking specific key performance indicators (KPIs).

Key Performance Indicators for CI/CD Pipelines:

| Indicator | Definition |
| --- | --- |
| Pipeline Success Rate | The percentage of pipeline runs that complete without errors. |
| Pipeline Failure Rate | The percentage of pipeline runs that fail or end with errors. |
| Average Pipeline Duration | The average time it takes for a pipeline to complete. |
| Deployment Frequency | How often code is deployed to production environments. |
| Mean Time to Recovery (MTTR) | The average time taken to restore service after a failure. |
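As a worked example, the KPIs in the table can be computed from a list of run records. The `Run` record, the `pipeline_kpis` function, and the sample numbers below are assumptions for illustration; real data would come from your CI system's API or logs.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class Run:
    succeeded: bool
    duration_min: float
    deployed: bool  # True if the run ended with a production deployment


def pipeline_kpis(
    runs: List[Run], period_days: float, recovery_minutes: List[float]
) -> Dict[str, float]:
    """Summarize pipeline runs over a reporting period."""
    if not runs or not recovery_minutes:
        raise ValueError("need at least one run and one recovery time")
    total = len(runs)
    successes = sum(run.succeeded for run in runs)
    return {
        "success_rate_pct": 100 * successes / total,
        "failure_rate_pct": 100 * (total - successes) / total,
        "avg_duration_min": mean(run.duration_min for run in runs),
        "deployments_per_day": sum(run.deployed for run in runs) / period_days,
        "mttr_min": mean(recovery_minutes),
    }


# Hypothetical data: four runs over two days, two incidents recovered.
runs = [
    Run(succeeded=True, duration_min=10, deployed=True),
    Run(succeeded=False, duration_min=14, deployed=False),
    Run(succeeded=True, duration_min=12, deployed=True),
    Run(succeeded=True, duration_min=12, deployed=True),
]
kpis = pipeline_kpis(runs, period_days=2, recovery_minutes=[30, 50])
print(kpis)
```

With this sample data the success rate is 75%, the failure rate is 25%, the average duration is 12 minutes, there are 1.5 deployments per day, and MTTR is 40 minutes.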

Measuring Pipeline Efficiency

Pipeline efficiency can be assessed by analyzing a few crucial metrics:

| Metric | Description |
| --- | --- |
| Cycle Time | Time taken for new code to go live. |
| Lead Time | The time from ideation to delivery. |
| Throughput | Number of new features delivered over a specified time period. |
| Work-in-Progress (WIP) | The number of tasks currently being worked on in the pipeline. |

By tracking these metrics, you can identify bottlenecks and continuously improve your CI/CD process.
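The sketch below shows one way these four metrics might be derived from timestamps. It assumes cycle time runs from the moment code is committed until it is released, and lead time from the moment the idea is recorded until release. The `WorkItem` record, the `efficiency_metrics` function, and the dates are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class WorkItem:
    proposed: datetime                    # idea recorded
    committed: datetime                   # code merged
    released: Optional[datetime] = None   # None means still in progress


def average(deltas: List[timedelta]) -> timedelta:
    return sum(deltas, timedelta()) / len(deltas)


def efficiency_metrics(
    items: List[WorkItem], window_start: datetime, window_end: datetime
) -> dict:
    """Compute cycle time, lead time, throughput, and WIP for a set of items."""
    released = [item for item in items if item.released is not None]
    if not released:
        raise ValueError("no released items to measure")
    return {
        "cycle_time": average([i.released - i.committed for i in released]),
        "lead_time": average([i.released - i.proposed for i in released]),
        # Items released inside the half-open window [start, end).
        "throughput": sum(window_start <= i.released < window_end for i in released),
        "wip": len(items) - len(released),
    }


def day(n: int) -> datetime:
    return datetime(2024, 1, n)


# Hypothetical work items: two released, one still in progress.
items = [
    WorkItem(proposed=day(1), committed=day(3), released=day(5)),
    WorkItem(proposed=day(2), committed=day(4), released=day(8)),
    WorkItem(proposed=day(6), committed=day(7)),
]
metrics = efficiency_metrics(items, window_start=day(1), window_end=day(10))
print(metrics)
```

For this sample the average cycle time is 3 days, the average lead time is 5 days, throughput is 2 items in the window, and 1 item is still in progress.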

Continuous Improvement of CI/CD Pipelines

To maintain and improve your CI/CD pipeline over time:

- Add more automated tests to catch issues early in the process.

- Continuously monitor pipeline performance using tools like Datadog or New Relic.

- Regularly review metrics and make data-driven decisions to optimize pipeline steps.

- Foster a culture of collaboration by encouraging team members to suggest improvements.

- Stay updated on the latest CI/CD tools and techniques to keep your pipeline cutting-edge.

What’s Next for CI/CD Pipeline Orchestration?

As the tech landscape evolves, new advancements in CI/CD pipeline orchestration are emerging.

Upcoming Technologies Impacting CI/CD:

| Technology | Impact on CI/CD |
| --- | --- |
| AI and Machine Learning | Makes pipelines smarter, automating error detection and optimization. |
| Serverless Computing | Reduces the need for managing infrastructure, enabling faster deployment. |
| Kubernetes | Enhances the management of large, complex microservice architectures. |
| DevSecOps | Integrates security practices directly into the pipeline process. |

Future Changes in CI/CD Practices

With the introduction of new technologies, CI/CD practices will continue to evolve:

| Change | What It Means |
| --- | --- |
| More Complex Pipelines | Pipelines will incorporate multiple branching paths and conditional logic. |
| Enhanced Security | Increased focus on integrating security throughout the CI/CD process. |
| Stronger Collaboration | Dev, QA, and Ops teams will work more closely for faster, seamless deployments. |
| Increased Automation | Machine learning and AI will further automate testing and deployment tasks. |

How does Atlantic BT fit in this picture?

We empower clients to leverage their existing tools like Bitbucket, Packer, and Terraform, augmented by cutting-edge AI, to create advanced CI/CD pipelines. Our approach optimizes deployment across multi-cloud environments by combining automation with intelligent insights for continuous improvement.

Key Features:

- Microservices & Containers: We break down monolithic applications into modular microservices using containers (Docker) to ensure scalability and fault tolerance. Packer automates the creation of machine images while Bitbucket Pipelines handles efficient code integration and testing.

- Infrastructure as Code (IaC): Using Terraform, we automate multi-cloud infrastructure provisioning, ensuring a consistent and repeatable deployment process across AWS, Azure, or Google Cloud.

- AI-Powered Automation: We integrate AI to predict errors and optimize performance by analyzing historical pipeline data. Machine learning models help improve test prioritization and auto-tune deployment times, making pipelines faster and more reliable.

- Smart Monitoring & Security: With AI-driven monitoring, we detect potential issues in real-time, providing actionable insights for resource optimization. Additionally, DevSecOps practices are embedded directly into the pipeline, ensuring security at every stage of the development lifecycle.

By leveraging your current tools and integrating AI, Atlantic BT helps clients achieve faster, smarter, and more resilient CI/CD pipelines, driving efficiency and agility in their software development workflows.